Training Data

Guide to Testing User Attributes for False Positives and False Negatives

This write-up is a guide to testing new user attributes. It covers why testing for false positives and false negatives matters, when to test, the testing process, and how to draw conclusions from the resulting ratios.

Importance of Testing

As Vatico continues to launch new features and expand its services, adding new user attributes becomes essential. These attributes must be 100% accurate to ensure smooth operations and to avoid negative consequences such as incorrect tax payments or wasteful spending.

When to Test

There is no hard-and-fast rule for when to carry out such testing, but it is necessary in the following situations:

1. Creating a new attribute that will be used by other models

If an attribute is defined incorrectly from the start, that error propagates into everything built on top of it, causing confusion and duplicated work. It is therefore vital that new attributes are correct the moment they are rolled out.

2. Adjusting an existing attribute to meet new needs

This may be needed because partner information has changed in a way that affects our logic (for example, renamed status values) or because of gaps in the current logic. In these situations, other models likely already rely on the attribute, so testing is even more important: an error here causes further errors downstream. Those downstream errors may go unnoticed, especially if users of the attribute are not aware of the change.

Definition of Key Terms

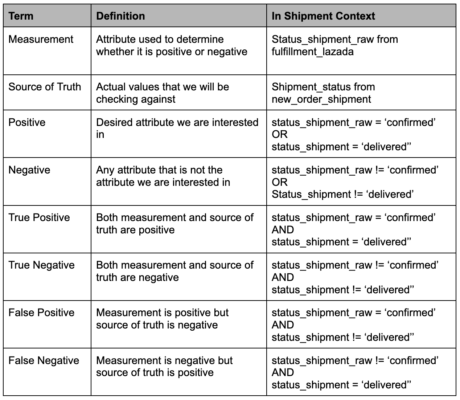

From this point onwards, we use the testing of false positives and false negatives for shipment status as a running example of how testing is done.

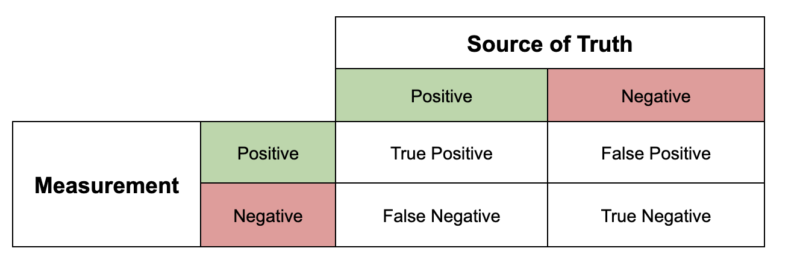

Figure 1: Metric Used for Testing

Figure 2: Table showing key terms and definitions
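The four key terms amount to comparing the attribute under test with a source of truth. The Python sketch below assumes, for the shipment status example, that "positive" means the shipment is delivered; the function name `classify` and its boolean inputs are hypothetical and not part of any existing model.

```python
def classify(attribute_says_delivered: bool, truth_is_delivered: bool) -> str:
    """Classify one shipment by comparing the attribute with the source of truth.

    Assumption: "positive" means delivered, "negative" means not delivered.
    """
    if attribute_says_delivered and truth_is_delivered:
        return "true positive"    # attribute says delivered, and it was
    if attribute_says_delivered and not truth_is_delivered:
        return "false positive"   # attribute says delivered, but it was not
    if not attribute_says_delivered and truth_is_delivered:
        return "false negative"   # attribute says not delivered, but it was
    return "true negative"        # attribute says not delivered, and it was not


# Hypothetical sample: (attribute value, source-of-truth value) per shipment.
samples = [(True, True), (True, False), (False, True), (False, False)]
for attr, truth in samples:
    print(attr, truth, "->", classify(attr, truth))
```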

With the key terms defined, we now move on to the testing steps.

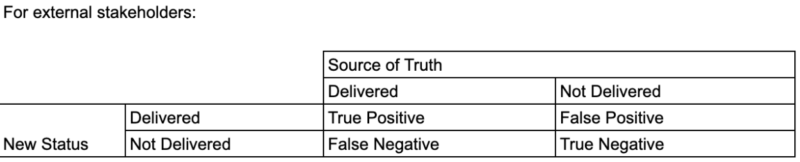

Step 1: Creating Metrics for External Stakeholders

For external stakeholders, testing results should be presented in a straightforward manner. The metric follows the format of Figure 1 above and uses non-technical language, so stakeholders can understand how accurate the attribute is without delving into complex details.

Figure 3: Example of Metric for external stakeholders

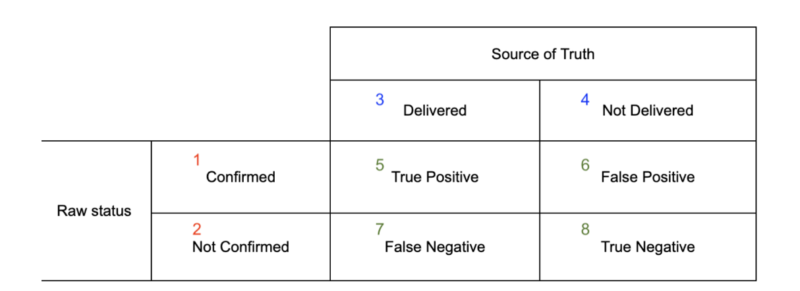

Step 2: Creating Metrics for Internal DA Testing

Internally, DA requires a more detailed metric that specifies the models used and defines what counts as a positive and a negative outcome. For instance, in the raw status model, "delivered" can be defined as the positive, while the remaining statuses are grouped together as not "delivered" (the negative).

Each box in the metric should also be numbered, which makes it easy to track progress and to refer to specific results when discussing them.
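One way to make the positive/negative grouping and the box numbering explicit is a small lookup table. The sketch below assumes hypothetical raw status values ("delivered", "in_transit", "returned", "cancelled") and numbers one box per combination of raw status and attribute outcome; the real statuses and numbering come from the internal metric in Figure 4.

```python
from itertools import product

# Hypothetical raw status values; replace with the partner's actual statuses.
RAW_STATUSES = ["delivered", "in_transit", "returned", "cancelled"]
POSITIVE_STATUSES = {"delivered"}  # positive = delivered; everything else is negative


def truth_group(raw_status: str) -> str:
    """Map a raw status to the positive or negative group."""
    return "positive" if raw_status in POSITIVE_STATUSES else "negative"


# Number one box per (raw status, attribute outcome) pair so results can be
# referred to as "box 1", "box 2", ... in discussions.
boxes = {
    number: (status, attribute_outcome)
    for number, (status, attribute_outcome) in enumerate(
        product(RAW_STATUSES, ["positive", "negative"]), start=1
    )
}

for number, (status, outcome) in boxes.items():
    print(f"Box {number}: raw status={status} ({truth_group(status)}), attribute={outcome}")
```

Keeping the grouping in one place (`POSITIVE_STATUSES`) means a renamed partner status only needs to be updated once.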

Figure 4: Internal testing metric with numbers

Step 3: Ensuring Comparable Data Sets

To draw meaningful conclusions, it is vital to have similar numbers of positive and negative data items in the datasets.

Use SQL queries to obtain the count for each box, removing duplicates and irrelevant data (for example, by filtering on date). Complete parity may not always be feasible, but efforts should be made to reduce discrepancies. Each box does not need its own query: as long as the counting logic is accurate and the positive and negative counts can be obtained, that is sufficient.

For example, there is no need to regroup the not "delivered" statuses into a single group in SQL; it may be simpler to leave them as separate counts and add them up manually afterwards.
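The counting step might look like the following sketch. It uses Python's built-in `sqlite3` module with an in-memory table so it runs on its own; the table name `shipments`, its columns, the sample rows, and the date window are all hypothetical. It counts distinct shipments (removing duplicates) within the date window, grouped by raw status and attribute value, and then adds up the not "delivered" statuses in Python instead of regrouping them in SQL.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE shipments (shipment_id TEXT, raw_status TEXT, "
    "attr_delivered INTEGER, created_date TEXT)"
)
# Hypothetical rows; S1 appears twice to show duplicate removal.
conn.executemany(
    "INSERT INTO shipments VALUES (?, ?, ?, ?)",
    [
        ("S1", "delivered", 1, "2024-01-05"),
        ("S1", "delivered", 1, "2024-01-05"),
        ("S2", "delivered", 0, "2024-01-06"),
        ("S3", "in_transit", 0, "2024-01-07"),
        ("S4", "returned", 1, "2024-01-08"),
        ("S5", "cancelled", 0, "2023-12-30"),  # outside the date window
    ],
)

# Count distinct shipments per (raw status, attribute value) in the date window.
rows = conn.execute(
    """
    SELECT raw_status, attr_delivered, COUNT(DISTINCT shipment_id)
    FROM shipments
    WHERE created_date BETWEEN '2024-01-01' AND '2024-01-31'
    GROUP BY raw_status, attr_delivered
    """
).fetchall()
counts = {(status, attr): n for status, attr, n in rows}

# The not-"delivered" statuses stay separate in SQL and are summed here instead.
tp = counts.get(("delivered", 1), 0)
fn = counts.get(("delivered", 0), 0)
fp = sum(n for (status, attr), n in counts.items() if status != "delivered" and attr == 1)
tn = sum(n for (status, attr), n in counts.items() if status != "delivered" and attr == 0)
print(f"TP={tp} FN={fn} FP={fp} TN={tn}")
```

Here the positive count is TP + FN (truly delivered) and the negative count is FP + TN.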

If large discrepancies remain after trying to reduce them, ask the team for assistance.
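To judge whether the gap between the positive and negative counts is "large", it can help to compute it explicitly. The sketch below assumes positives are the truly delivered shipments (TP + FN) and negatives are the rest (FP + TN); the 20% tolerance and the sample counts are hypothetical, not a team standard.

```python
def count_imbalance(positive_count: int, negative_count: int) -> float:
    """Relative gap between the larger and smaller group (0.0 = perfectly balanced)."""
    larger = max(positive_count, negative_count)
    smaller = min(positive_count, negative_count)
    if larger == 0:
        raise ValueError("both groups are empty")
    return (larger - smaller) / larger


TOLERANCE = 0.20  # hypothetical threshold for a "large" discrepancy

positives, negatives = 480, 520  # hypothetical counts
gap = count_imbalance(positives, negatives)
print(f"gap = {gap:.1%}, needs attention: {gap > TOLERANCE}")
```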

Step 4: Obtaining Ratios and Drawing Conclusions

With comparable datasets, the true positive and true negative ratios can be calculated for each box. The SQL query used for each box should also be documented.
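The guide does not pin down the exact formula, so the sketch below assumes the common definitions: the true positive ratio is the share of truly delivered shipments the attribute marks as delivered, TP / (TP + FN), and the true negative ratio is the share of not-delivered shipments it marks as not delivered, TN / (TN + FP). Both reach 100% only when there are no false negatives and no false positives. The counts are hypothetical.

```python
def true_positive_ratio(tp: int, fn: int) -> float:
    """Share of actual positives that the attribute labels positive: TP / (TP + FN)."""
    if tp + fn == 0:
        raise ValueError("no actual positives to evaluate")
    return tp / (tp + fn)


def true_negative_ratio(tn: int, fp: int) -> float:
    """Share of actual negatives that the attribute labels negative: TN / (TN + FP)."""
    if tn + fp == 0:
        raise ValueError("no actual negatives to evaluate")
    return tn / (tn + fp)


# Hypothetical counts for one comparison.
tp, fn, tn, fp = 495, 5, 500, 0
tpr = true_positive_ratio(tp, fn)
tnr = true_negative_ratio(tn, fp)
print(f"true positive ratio = {tpr:.1%}, true negative ratio = {tnr:.1%}")
print("ready to proceed" if tpr == 1.0 and tnr == 1.0 else "investigate case by case")
```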

If the attribute shows 100% true positives and true negatives (that is, no false positives or false negatives), it has the accuracy needed to proceed with confidence.

If inaccuracies exist, evaluate them case by case and resolve the issues causing the false positives and false negatives. For example, if the source of truth itself is out of date, create the relevant tickets to have it updated. If you are unsure how to resolve an issue, ask the team for assistance.
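For the case-by-case evaluation, it helps to pull out the individual records behind the false positives and false negatives. The sketch below assumes each record is a hypothetical `(shipment_id, attribute_says_delivered, truth_is_delivered)` tuple already joined from the attribute model and the source of truth.

```python
from typing import Iterable, List, Tuple

Record = Tuple[str, bool, bool]  # (shipment_id, attribute value, source-of-truth value)


def mismatches(records: Iterable[Record]) -> Tuple[List[str], List[str]]:
    """Return shipment IDs of false positives and false negatives, for manual review."""
    false_positives: List[str] = []
    false_negatives: List[str] = []
    for shipment_id, attr_delivered, truth_delivered in records:
        if attr_delivered and not truth_delivered:
            false_positives.append(shipment_id)
        elif truth_delivered and not attr_delivered:
            false_negatives.append(shipment_id)
    return false_positives, false_negatives


records = [("S1", True, True), ("S2", True, False), ("S3", False, True), ("S4", False, False)]
fps, fns = mismatches(records)
print("false positives:", fps)  # prints false positives: ['S2']
print("false negatives:", fns)  # prints false negatives: ['S3']
```

Each returned ID can then be checked against the source of truth to decide whether the attribute logic or the source itself needs fixing.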